Athenomics - The Importance of NGS Sequencing Coverage and Throughput

The quality and reliability of NGS data depend heavily on two critical factors: sequencing coverage and throughput

Next-Generation Sequencing (NGS) has transformed genomics through high-throughput, cost-effective DNA and RNA analysis. However, the quality and reliability of NGS data rely heavily on two crucial metrics: sequencing coverage and throughput. Coverage reflects how thoroughly a genome is sequenced, while throughput defines the total data output from a sequencing run. Properly balancing these factors is essential for accurate variant detection, reliable research outcomes, and cost-effective experimentation.

1. What is Sequencing Coverage?

Sequencing coverage (or depth) refers to the average number of times each nucleotide in the genome is sequenced. For example, 30× coverage means that each base is read approximately 30 times, whereas 100× coverage provides greater confidence for detecting sequence variants.

Coverage plays a pivotal role in three main areas: accuracy, sensitivity, and reliability. Higher coverage reduces the likelihood of sequencing errors and PCR biases, improves the detection of rare variants such as tumor mutations or microbial strains, and ensures more consistent results in regions of the genome that are repetitive or complex.

| Application | Recommended Coverage | Reason |

|---|---|---|

| Whole Genome Sequencing (WGS) | 30x–50x | Balances cost and accuracy |

| Whole Exome Sequencing (WES) | 100x–150x | Focuses on coding regions |

| Cancer Genomics (Tumor-Normal) | 100x–300x | Detects low-frequency mutations |

| Metagenomics | 5x–20x (per species) | Identifies microbial composition |

| Single-Cell RNA-seq | 50,000–100,000 reads/cell | Captures transcriptome diversity |

Insufficient coverage raises the risk of false negatives and missing variants, while excessively high coverage yields diminishing returns and increased costs.

2. What is Throughput, and Why Does It Matter?

Throughput is defined as the amount of sequencing data generated per run, typically measured in gigabases (Gb), terabases (Tb), or number of reads. High throughput provides several advantages:

- Scalability: High-throughput systems like Illumina NovaSeq X or MGI DNBSEQ-T20 can process thousands of samples in parallel, making them ideal for population studies. In contrast, low-throughput platforms such as MiSeq or Nanopore MinION are best for smaller projects.

- Cost Efficiency: Increased throughput reduces the cost per gigabase. For instance, NovaSeq X can sequence a whole human genome for under $200, compared to over $1,000 using older technology.

- Turnaround Time: High-throughput sequencing can require days to weeks for data generation, but portable systems like MinION offer real-time data within hours.

3. Balancing Coverage and Throughput

Experimental design often requires trade-offs. High coverage with low throughput is best suited for small-scale, critical studies like rare disease diagnostics, while lower coverage with high throughput fits large-scale screenings, such as genome-wide association studies (GWAS) or microbial profiling.

Application-Based Optimization

- Human Whole-Genome Sequencing: 30x–50x coverage is standard for germline variants; 100x or higher may be needed for tumor somatic variants.

- Exome Sequencing: 100x–150x is typically reliable for coding variant detection.

- Metagenomics: 5x–20x per species is sufficient for most analyses.

- Single-Cell Sequencing: 50,000–100,000 reads per cell are recommended to adequately profile single-cell transcriptomes.

4. Challenges in Coverage and Throughput

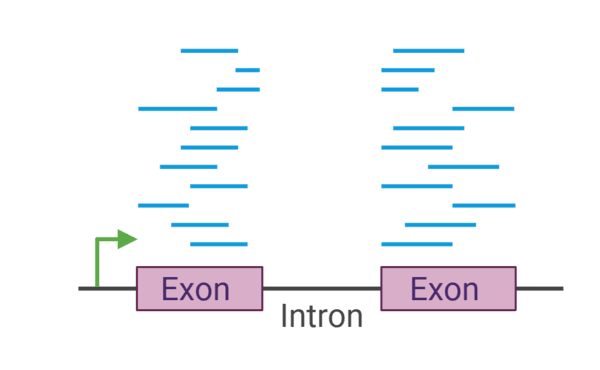

Uneven Coverage

Some genomic regions, like those rich in GC content or highly repetitive, are under-sequenced (coverage bias). Solutions include PCR-free library preparation and using a combination of short and long reads (hybrid sequencing).

High-Throughput Data Management

High-throughput experiments generate massive data volumes—a single NovaSeq X run can produce around 48 TB. Managing this requires cloud computing resources (e.g., AWS or Google Genomics) and efficient lossless compression formats such as CRAM.

Cost Versus Depth Dilemma

Ultra-deep sequencing, such as 1000x coverage for liquid biopsy studies, is prohibitively expensive. Alternatives include targeted sequencing panels that focus on specific genes of interest and the use of unique molecular identifiers (UMIs) to reduce sequencing noise.

Conclusion

Sequencing coverage and throughput form the backbone of trustworthy NGS results. Coverage determines accuracy and sensitivity, while throughput allows for scaling and cost savings. To achieve the best outcomes, tailor your experiment’s coverage to the application (for example, 30x for whole-genome and 100x for exome sequencing), and select a throughput that matches your project's scale (higher for large cohorts, lower for targeted or portable studies). Integrating short and long reads helps resolve complex genomes.

As technology advances, NGS will continue to become faster, cheaper, and more accessible, making deep genomics widely available.

Need help determining the optimal coverage and throughput for your NGS experiment? Contact our team for guidance on experimental design and best practices.